Grounding Language Models: A Journey into the Foundation of LLMs

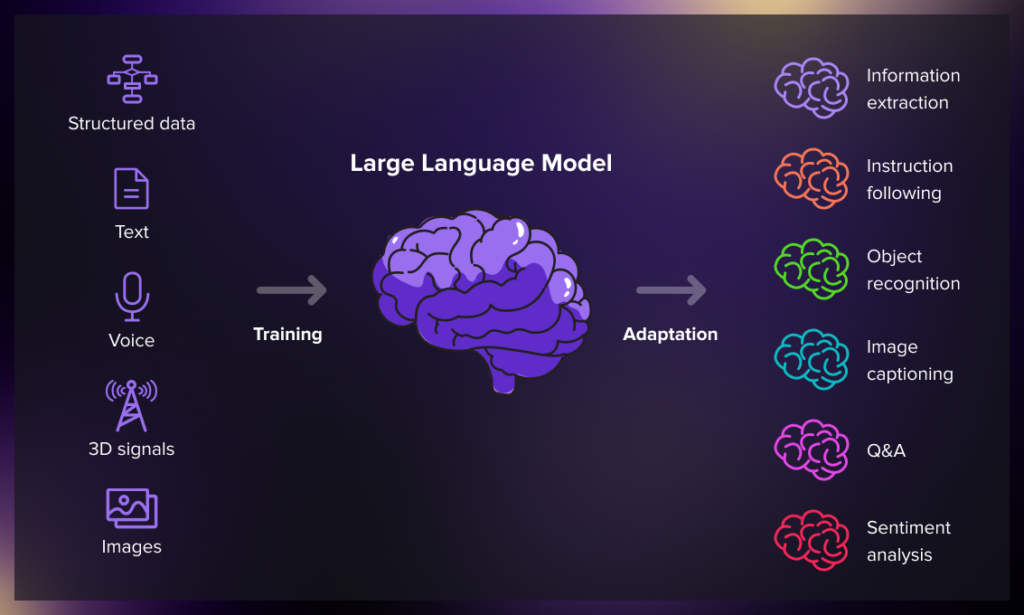

In the ever-evolving landscape of artificial intelligence, language models have emerged as powerful tools that shape how we interact with technology. One fascinating aspect of these models, particularly Large Language Models (LLMs), is their grounding: the foundation that connects what they generate to knowledge about the world. Join us as we explore grounding in LLMs and how it shapes intelligent conversations.

According to the Microsoft Tech Community, the primary motivation for grounding is that LLMs are not databases, even though they possess a wealth of knowledge. They are designed to be used as general reasoning and text engines. LLMs have been trained on an extensive corpus of information, some of which they have retained, giving them a broad understanding of language, the world, reasoning, and text manipulation. However, we should use them as engines rather than as stores of knowledge.

Understanding Grounding

In language models, grounding refers to the process by which these models connect to real-world knowledge and experience. LLMs, built on large neural networks, are trained on colossal datasets, which allows them to comprehend and generate human-like text. However, the crux of their proficiency lies in how well they are grounded in the underlying concepts that give language its meaning.

The Roots of Knowledge

To truly appreciate the capabilities of LLMs, it’s crucial to delve into the sources of knowledge that form their grounding. These models are trained on diverse datasets encompassing literature, articles, websites, and even social media, reflecting the richness and complexity of human language. The synthesis of this information equips LLMs with a broad spectrum of knowledge, allowing them to navigate various topics with finesse.

Retrieval-Augmented Generation in Language Models

Hybridizing Generation and Retrieval

Retrieval-augmented generation combines the generative power of language models with knowledge retrieved from external sources. Relevant documents are fetched at query time and supplied to the model alongside the user's request, so the model can draw on information beyond what it learned during training.
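To make the retrieve-then-generate idea concrete, here is a minimal sketch in Python. It assumes a small in-memory list of documents and uses bag-of-words cosine similarity as a stand-in for the embedding-based vector search that production RAG systems typically use. The function names `retrieve` and `augment_prompt` are hypothetical, and the final call to a language model is omitted.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    # Lowercase the text and keep runs of letters and digits as tokens.
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-frequency vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    # Rank documents by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

def augment_prompt(query: str, passages: list[str]) -> str:
    # Place the retrieved passages ahead of the question so the model can use them.
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

docs = [
    "The Eiffel Tower is located in Paris and was completed in 1889.",
    "Python is a programming language created by Guido van Rossum.",
    "Paris is the capital of France.",
]
question = "When was the Eiffel Tower completed?"
top = retrieve(question, docs, k=1)
print(augment_prompt(question, top))
```

Swapping the scoring function for embedding similarity would not change the overall shape of the pipeline: retrieve relevant passages, then place them in the prompt.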

Improved Context Understanding

This approach enriches the language model’s understanding of context, making the generated content more likely to be accurate and contextually relevant. This enhanced contextual awareness is a significant step forward in natural language processing.

Addressing Knowledge Gaps

Retrieval-augmented generation helps overcome limitations in handling rare or specialized topics by dynamically fetching information from external databases. This capability enables language models to generate more comprehensive and accurate content across a wide range of subjects.

Enhanced Factuality and Credibility

Retrieval mechanisms allow language models to cross-check information against external sources rather than relying solely on what they memorized during training. This yields more reliable and credible content, which is vital for applications such as question answering and content creation.
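Real fact-checking is much harder than this, but a crude lexical heuristic can illustrate the idea of cross-referencing a generated answer against retrieved sources. The sketch below assumes the answer and the source passages are plain strings; the names `content_words` and `support_report`, the tiny stopword list, and the 0.6 threshold are all hypothetical choices for illustration.

```python
import re

# Tiny illustrative stopword list; a real system would use a proper one.
STOPWORDS = {"the", "a", "an", "is", "was", "of", "in", "and", "to", "it"}

def content_words(text: str) -> set[str]:
    # Lowercased alphanumeric tokens with stopwords removed.
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def support_report(answer: str, sources: list[str], threshold: float = 0.6) -> list[tuple[str, bool]]:
    # Split the answer into sentences; a sentence counts as "supported" if at least
    # `threshold` of its content words appear in a single source passage.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    report = []
    for sentence in sentences:
        words = content_words(sentence)
        best = 0.0
        for src in sources:
            if words:
                best = max(best, len(words & content_words(src)) / len(words))
        report.append((sentence, best >= threshold))
    return report

sources = ["The Eiffel Tower is located in Paris and was completed in 1889."]
answer = "The Eiffel Tower was completed in 1889. It is 500 meters tall."
for sentence, supported in support_report(answer, sources):
    print(("SUPPORTED  " if supported else "UNVERIFIED ") + sentence)
```

Word overlap cannot detect a contradiction phrased with the same words, so a check like this is only useful for flagging sentences that have no apparent backing in the retrieved text.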

Adaptability to Dynamic Information

In a rapidly evolving world, retrieval-augmented generation lets language models adapt to dynamic information by accessing real-time or updated data during the generation process. This helps ensure that the generated content reflects the most recent and relevant information.
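The key point is that fresh information lives in the external store, not in the model's weights, so updating the store changes what gets retrieved without any retraining. The sketch below assumes a simple in-memory index with keyword matching; `LiveIndex`, `upsert`, and `search` are hypothetical names, and the pricing text is made-up example data.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

@dataclass
class Document:
    text: str
    updated: datetime

@dataclass
class LiveIndex:
    # Hypothetical in-memory store: documents can be added or replaced at any time,
    # and the next query sees the change immediately (no model retraining involved).
    docs: dict[str, Document] = field(default_factory=dict)

    def upsert(self, doc_id: str, text: str, updated: datetime) -> None:
        # Insert a new document or overwrite an existing one with the same id.
        self.docs[doc_id] = Document(text, updated)

    def search(self, keyword: str) -> list[Document]:
        # Case-insensitive keyword match, newest first, so fresher entries surface first.
        hits = [d for d in self.docs.values() if keyword.lower() in d.text.lower()]
        return sorted(hits, key=lambda d: d.updated, reverse=True)

index = LiveIndex()
index.upsert("pricing", "The Basic plan costs $10 per month.", datetime(2024, 1, 1, tzinfo=timezone.utc))
print(index.search("basic plan")[0].text)

# The source data changes; the next retrieval reflects it right away.
index.upsert("pricing", "The Basic plan costs $12 per month.", datetime(2024, 6, 1, tzinfo=timezone.utc))
print(index.search("basic plan")[0].text)
```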

Applications in Conversational AI

Retrieval-augmented generation is widely used in conversational AI, where it improves contextual understanding and factual accuracy. This approach allows language models to hold more coherent, context-aware conversations and deliver responses that mimic human-like comprehension.

Motivation for Grounding

The impetus for grounding large language models (LLMs) comes from recognizing that, despite their extensive knowledge, these models are not static databases but versatile reasoning and text engines. Because LLMs are trained on data up to a specific point in time and only on publicly available information, they face challenges such as outdated knowledge and no access to private or proprietary data. Grounding, mainly through Retrieval-Augmented Generation (RAG), addresses these limitations by supplying task-specific information at inference time. Fine-tuning remains an option, but it is increasingly seen as a slower, less favored approach, with RAG emerging as a pragmatic and efficient way to make LLMs more adaptable across diverse use cases.
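In practice, "supplying task-specific information at inference time" often comes down to how the prompt is assembled. The sketch below assumes the relevant passages have already been retrieved; `build_grounded_prompt` is a hypothetical helper, the instruction wording is one possible choice, and `max_chars` is a rough character budget standing in for a real token limit.

```python
def build_grounded_prompt(question: str, passages: list[str], max_chars: int = 2000) -> str:
    # Number each retrieved passage so the model can cite it, and stop adding
    # passages once the rough character budget (a stand-in for the context window) is used up.
    lines, used = [], 0
    for i, passage in enumerate(passages, start=1):
        entry = f"[{i}] {passage}"
        if used + len(entry) > max_chars:
            break
        lines.append(entry)
        used += len(entry)
    sources = "\n".join(lines)
    return (
        "Answer the question using only the sources below. "
        "Cite sources by number, and say you don't know if they don't contain the answer.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )

prompt = build_grounded_prompt(
    "What is our refund window?",
    ["Refunds are accepted within 30 days of purchase.", "Support is available 9am-5pm on weekdays."],
)
print(prompt)
# The prompt would then be sent to an LLM; that call is omitted because it depends on the provider.
```

Because the model's knowledge comes from the sources in the prompt, updating those sources changes the answers without touching the model, which is the practical advantage over fine-tuning described above.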

Real-world Applications of LLM Grounding

- Question Answering Systems: LLMs with robust grounding capabilities excel in question-answering tasks. These models can understand and reply to user queries with a higher degree of accuracy by connecting the language input to relevant real-world information.

- Virtual Assistants: Grounded LLMs increasingly power virtual assistants like Siri, Alexa, and Google Assistant. These systems use grounding to interpret user commands, extract meaning, and provide appropriate responses or actions.

- Content Generation: LLMs are increasingly used for content generation in various domains. Whether writing articles, creating marketing copy, or generating code snippets, grounding helps ensure that the generated content aligns with real-world facts and requirements.

- Sentiment Analysis: Grounded language models are crucial in sentiment analysis applications. By understanding the context and grounding sentiments in real-world scenarios, these models can accurately assess the emotional tone of a piece of text.

- Automated Customer Support: LLMs with grounding capabilities enhance automated customer support systems. They can understand customer queries, extract relevant details, and deliver detailed responses or direct users to appropriate resources.

The Role of Context

One of the critical elements of grounding LLMs is the emphasis on context. These models excel in understanding the context in which a word or phrase is used, enabling them to generate coherent and contextually relevant responses. This contextual grounding mimics human-like comprehension, contributing to the natural flow of conversations and interactions.

Navigating Ambiguity

Language is inherently ambiguous, often requiring contextual cues for accurate interpretation. With their grounding, LLMs tackle ambiguity by drawing on their extensive training data to infer the most probable meanings. This ability to navigate ambiguity reflects the adaptability and robustness of grounded language models in handling diverse linguistic scenarios.

Applications of Grounding LLMs

The impact of grounding LLMs extends across various domains, influencing the development of applications that enhance human-machine interactions. From chatbots and virtual assistants to content creation and translation services, the grounded nature of LLMs underpins their versatility and utility in addressing real-world challenges.

Challenges and Ethical Considerations

While the capabilities of grounded LLMs are impressive, they also raise important considerations. Potential biases in training data, ethical concerns surrounding content generation, and developers’ responsibility to build unbiased and ethical AI applications are challenges that require careful navigation.

Final Thoughts

Grounding LLMs is a cornerstone in the evolution of artificial intelligence, unlocking new possibilities for human-machine collaboration. As we continue to harness the power of language models, understanding and refining their grounding will be crucial in shaping the next generation of intelligent systems.

The journey into the foundation of LLMs is not just a technical exploration but an invitation to contemplate the intricate interplay between language, knowledge, and the boundless potential of artificial intelligence. For more insightful blogs, visit auxin.io.