Unveiling the Multimodal Marvel: How LLMs Redefine Understanding

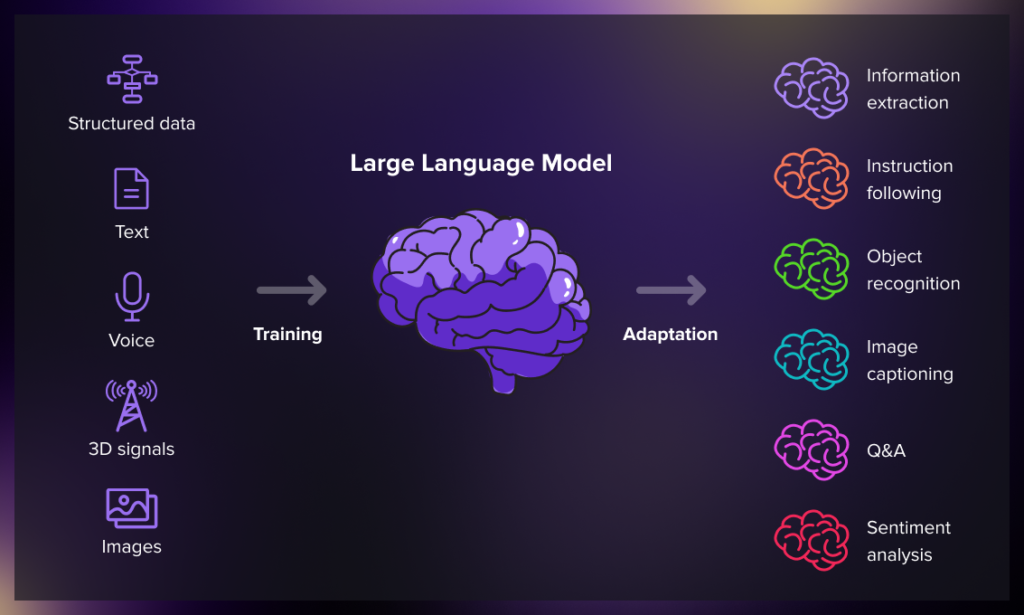

In the ever-accelerating realm of artificial intelligence, a groundbreaking evolution is reshaping the landscape: large language models (LLMs) are moving beyond text and into the realm of multimodality. This paradigm shift heralds a new era in which these linguistic giants not only decipher words but also work with images, sounds, and more. As we embark on this exploration, it becomes evident that these models are no longer limited to textual understanding; they orchestrate a symphony of meaning across diverse modalities, transforming how we interact with and interpret the digital world. Let's unravel the intricacies of this multimodal revolution and explore its impact on artificial intelligence.

Understanding Multimodality in LLMs

Traditionally, language models were linguistic specialists, adept at parsing the details of written text. With the advent of multimodal large language models, that paradigm has shifted dramatically. These models are not content with linguistic excellence alone; they integrate diverse inputs, often by pairing a language model with encoders for images, audio, and beyond. This marks a fundamental departure from the text-only design of earlier language models and challenges the notion that context should be confined to words. In this new era, LLMs redefine context, building a holistic understanding that transcends linguistic boundaries and draws on the rich tapestry of multimodal information.

LLMs with Image Understanding and Audio Processing

Witness the cooperation of language and vision. Multimodal LLMs interpret not just words but images, unlocking a new dimension of understanding. From describing complex scenes to recognizing objects, they paint vivid pictures with their linguistic brushstrokes.

Beyond the visual, LLMs lend their ears to audio. Speech recognition, emotion detection, and even musical composition are within reach of audio-capable models. It's a symphony in which language becomes the bridge between the visual and the auditory realms.

Breaking Language Barriers and Practical Applications

Multimodal LLMs are often polyglots of the digital world. Many don't just handle one language; they work across many. This multilingual capability helps lower language barriers, fostering global communication and understanding.

From supporting healthcare diagnostics with medical images to improving accessibility through speech recognition, the real-world applications of multimodal LLMs are wide-ranging. Witness the convergence of human and technological intelligence in solving complex problems.

Ethics in Multimodality: Navigating Challenges

Amid our wonder at what multimodal AI can do, we have to think about how to use it responsibly. How do we make sure these models don't exhibit bias or treat some groups unfairly? And here's another tricky question: can they grasp the customs and ways of thinking of different cultures? Answering these questions is essential as we set sail into the unexplored territory of multimodal AI. The goal is to ensure our high-tech tools are not only intelligent but also fair and respectful to everyone.

The Journey Ahead: Innovations on the Horizon

The exploration of multimodal AI's impact is an ongoing saga. What innovations lie on the horizon? Will we see LLMs choreographing entire virtual worlds? The journey has just begun, and the possibilities are as vast as the digital cosmos.

In this symphony of words, images, and sounds, multimodal LLMs redefine how we interact with AI. It's not just about understanding; it's about experiencing the digital world in all its richness. As we delve deeper into this realm, the question isn't just what LLMs can do; it's what they can inspire us to imagine. Welcome to the era where language models are not just tools but storytellers of the digital age. For more insightful blogs, visit auxin.io