K3s and Security Considerations in Modern DevOps Environments

Container orchestration plays a pivotal role in modern IT infrastructure, changing how applications are deployed, managed, and scaled. It lets organizations automate the deployment, scaling, and management of containerized applications, streamlining operations and improving efficiency. Two popular options are K3s and K8s. K3s, a lightweight Kubernetes distribution, offers a simplified approach to Kubernetes that suits resource-constrained environments and edge computing scenarios. K8s, the common abbreviation for Kubernetes, is a mature and robust container orchestration platform widely adopted by enterprises for its scalability, flexibility, and extensive ecosystem. Both empower organizations to harness containers and microservices, driving innovation and agility in today’s rapidly evolving IT landscape.

Understanding K3s and K8s

K3s and K8s, renowned container orchestration platforms, offer distinct approaches to managing containerized applications.

| K3s | K8s |
| --- | --- |
| K3s bundles the Kubernetes API server, controller manager, scheduler, kubelet, and an optional embedded etcd into a single binary, eliminating the need to install these components separately. This streamlined architecture reduces memory and disk space requirements. | K8s typically involves a larger number of components and dependencies, such as the kubelet, etcd, and the API server, resulting in a more complex deployment; each component is installed separately, often requiring more resources and management overhead. |
| Prioritizes ease of use | Excels in scalability and feature richness |
| Uses SQLite (sqlite3) as the default datastore for resource configuration | Uses etcd (v3) as the datastore for resource configuration |
| Used for: IoT devices, embedded devices, edge computing, development environments | Used for: large-scale app deployments, microservice architectures, big data processing, machine learning |

Minimal resource utilization is where K3s outshines K8s. For instance, while a typical Kubernetes cluster may require several gigabytes of RAM and significant CPU resources to run, a K3s cluster can operate efficiently with far fewer resources. With its smaller memory and CPU footprint, K3s enables organizations to deploy Kubernetes clusters in environments with limited resources, reducing infrastructure costs and improving overall efficiency. Additionally, K3s offers simplified management and maintenance, making it well suited to edge computing, IoT, and development environments. Ultimately, the choice between K3s and K8s depends on the organization’s requirements and the constraints of the deployment, with both offering distinct advantages for orchestrating containerized applications.

Security Challenges and Considerations for K3s

Securing K3s environments raises several common challenges that call for proactive measures. One concern is unauthorized access to the K3s control plane, which could lead to unauthorized configuration changes or data breaches. Additionally, vulnerabilities in container images or the underlying operating system pose significant risks, potentially allowing attackers to compromise the integrity of the system.

Furthermore, inadequate network segmentation and weak authentication mechanisms may expose K3s clusters to unauthorized access or lateral movement by malicious actors. To address these challenges, organizations deploying K3s should prioritize security best practices such as implementing robust authentication mechanisms, enforcing least-privilege access controls, regularly updating and patching container images and the underlying infrastructure, and implementing network segmentation to limit access to sensitive resources. The following security considerations can make your K3s cluster more secure:

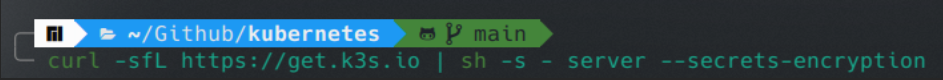

SECRETS ENCRYPTION CONFIG

K3s supports enabling secrets encryption at rest. When first starting the server, passing the flag --secrets-encryption will do the following automatically:

- Generate an AES-CBC key

- Generate an encryption config file with the generated key

- Pass the config to the KubeAPI as encryption-provider-config

Enable Encryption

Encryption can be enabled when creating a cluster by passing the --secrets-encryption flag when the server first starts.

curl -sfL https://get.k3s.io | sh -s - server --secrets-encryption

Secrets-encryption cannot be enabled on an existing server without restarting it.
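The same option can also be set persistently in the K3s configuration file instead of on the install command line. The sketch below is a minimal illustration of that approach, not the only way to do it. `CONFIG_DIR` is a hypothetical variable introduced here; it defaults to a scratch directory so the snippet can be tried without root, whereas on a real server the file would normally live in /etc/rancher/k3s.

```shell
# CONFIG_DIR is an assumption for this sketch; on a K3s server it would
# typically be /etc/rancher/k3s.
CONFIG_DIR="${CONFIG_DIR:-./k3s-config-sketch}"
mkdir -p "$CONFIG_DIR"

# Equivalent to passing --secrets-encryption when starting the server.
cat > "$CONFIG_DIR/config.yaml" <<'EOF'
secrets-encryption: true
EOF

# Restrict the file to its owner, since server configuration is sensitive.
chmod 600 "$CONFIG_DIR/config.yaml"

# On a running server, this command (run as root) reports whether
# secrets encryption is currently enabled:
#   k3s secrets-encrypt status
```

Keeping the setting in a file makes it harder to lose the flag when the server is reinstalled or restarted by hand.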

POD SECURITY

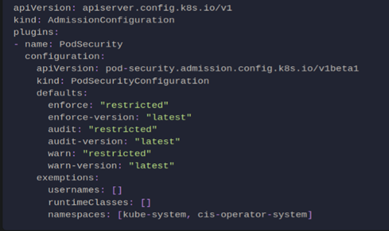

Pod Security Admission (PSA) helps restrict the behavior of pods inside the cluster. The policy should be written to a file named psa.yaml in the /var/lib/rancher/k3s/server directory. Here is an example of a compliant PSA:
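As a hedged sketch of what such a file might look like, the snippet below writes a PSA configuration that enforces the `restricted` profile cluster-wide and exempts only `kube-system`. `K3S_SERVER_DIR` is a hypothetical variable that defaults to a scratch directory; on a real server it would be /var/lib/rancher/k3s/server. The exemption list and the `v1` configuration API version are assumptions that should be checked against your cluster version and workloads.

```shell
# K3S_SERVER_DIR is an assumption for this sketch; on a K3s server it
# would be /var/lib/rancher/k3s/server.
K3S_SERVER_DIR="${K3S_SERVER_DIR:-./k3s-server-sketch}"
mkdir -p "$K3S_SERVER_DIR"

# Enforce, audit, and warn on the "restricted" Pod Security Standard,
# exempting only the kube-system namespace (adjust for your cluster).
cat > "$K3S_SERVER_DIR/psa.yaml" <<'EOF'
apiVersion: apiserver.config.k8s.io/v1
kind: AdmissionConfiguration
plugins:
- name: PodSecurity
  configuration:
    apiVersion: pod-security.admission.config.k8s.io/v1
    kind: PodSecurityConfiguration
    defaults:
      enforce: "restricted"
      enforce-version: "latest"
      audit: "restricted"
      audit-version: "latest"
      warn: "restricted"
      warn-version: "latest"
    exemptions:
      usernames: []
      runtimeClasses: []
      namespaces: [kube-system]
EOF
```

The API server only reads this file if it is told where to find it, for example by starting the server with `--kube-apiserver-arg=admission-control-config-file=/var/lib/rancher/k3s/server/psa.yaml`.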

NETWORK POLICIES

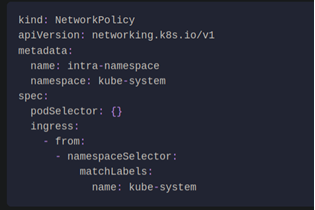

The CIS benchmark requires that all namespaces have a network policy that reasonably limits traffic into namespaces and pods.

Network policies should be placed in the /var/lib/rancher/k3s/server/manifests directory, where they will automatically be deployed on startup. Here is an example of a compliant network policy.

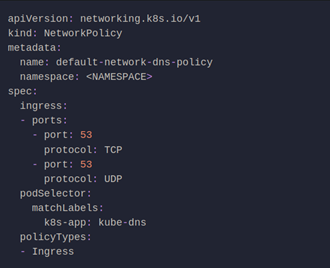
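A minimal sketch of such a policy, assuming the goal is to allow only traffic that originates inside the same namespace, is shown below for `kube-system`. `MANIFEST_DIR` is a hypothetical variable that defaults to a scratch directory; on a real server it would be /var/lib/rancher/k3s/server/manifests. The policy name and the reliance on the `kubernetes.io/metadata.name` namespace label are assumptions for illustration.

```shell
# MANIFEST_DIR is an assumption for this sketch; on a K3s server it
# would be /var/lib/rancher/k3s/server/manifests.
MANIFEST_DIR="${MANIFEST_DIR:-./k3s-manifests-sketch}"
mkdir -p "$MANIFEST_DIR"

# Select every pod in kube-system and admit ingress only from pods in
# namespaces labeled as kube-system itself.
cat > "$MANIFEST_DIR/intra-namespace-policy.yaml" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: intra-namespace
  namespace: kube-system
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: kube-system
EOF
```

A similar policy would be needed in every other namespace, with the `namespace` field and label value changed to match.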

With these restrictions applied, DNS will be blocked unless explicitly allowed. Below is a network policy that allows DNS traffic.
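One way such a DNS policy could look is sketched here. It admits TCP and UDP traffic on port 53 to pods labeled `k8s-app: kube-dns` in `kube-system`. `MANIFEST_DIR` is again a hypothetical variable pointing at a scratch directory, and the policy name is illustrative; confirm the DNS pod label on your cluster before relying on it.

```shell
# MANIFEST_DIR is an assumption for this sketch; on a K3s server it
# would be /var/lib/rancher/k3s/server/manifests.
MANIFEST_DIR="${MANIFEST_DIR:-./k3s-manifests-sketch}"
mkdir -p "$MANIFEST_DIR"

# Allow inbound DNS queries (port 53 over TCP and UDP) to the cluster
# DNS pods, which would otherwise be cut off by a restrictive policy.
cat > "$MANIFEST_DIR/dns-policy.yaml" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-network-dns-policy
  namespace: kube-system
spec:
  podSelector:
    matchLabels:
      k8s-app: kube-dns
  policyTypes:
  - Ingress
  ingress:
  - ports:
    - port: 53
      protocol: TCP
    - port: 53
      protocol: UDP
EOF
```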

The metrics-server and Traefik ingress controller will be blocked by default if network policies are not created to allow access. Traefik v1, packaged in K3s version 1.20 and below, uses different labels than Traefik v2. Ensure that you use only the sample YAML that matches the version of Traefik on your cluster.
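The sketch below shows how policies that re-open access to these two components might be written. It assumes the metrics-server pods carry the label `k8s-app: metrics-server` and that Traefik v2 pods carry `app.kubernetes.io/name: traefik`; both labels are assumptions to verify with `kubectl get pods -n kube-system --show-labels`, and a Traefik v1 cluster would need a different selector. `MANIFEST_DIR` and the policy names are hypothetical.

```shell
# MANIFEST_DIR is an assumption for this sketch; on a K3s server it
# would be /var/lib/rancher/k3s/server/manifests.
MANIFEST_DIR="${MANIFEST_DIR:-./k3s-manifests-sketch}"
mkdir -p "$MANIFEST_DIR"

# Two policies in one file: each selects one component by label and
# allows all ingress to it ("- {}" matches any source).
cat > "$MANIFEST_DIR/allow-metrics-and-traefik.yaml" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-all-metrics-server
  namespace: kube-system
spec:
  podSelector:
    matchLabels:
      k8s-app: metrics-server
  policyTypes:
  - Ingress
  ingress:
  - {}
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-all-traefik-v2-ingress
  namespace: kube-system
spec:
  podSelector:
    matchLabels:
      app.kubernetes.io/name: traefik
  policyTypes:
  - Ingress
  ingress:
  - {}
EOF
```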

CONTROL PLANE NODE CONFIGURATION FILES

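These files provide details and configuration for the control plane, so their security is critical for the cluster. Because K3s runs the control plane components inside a single process, some of the files listed below may not exist on a K3s node; the corresponding checks then do not apply.

The following files should have permissions set to 644 or more restrictive and ownership set to root:root: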

- API server pod specification file

- Controller Manager pod specification file

- Scheduler pod specification file

- Etcd pod specification file

- Container Network Interface file

- scheduler.conf file

- controller-manager.conf file

- Kubernetes PKI certificate files

Also, ensure that the etcd data directory permissions are set to 700 or more restrictive and that its ownership is set to etcd:etcd.

Ensure that the admin.conf file permissions are set to 600 or more restrictive and that its ownership is set to root:root.

Ensure that the Kubernetes PKI key file permissions are set to 600.
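Checks like these are easy to script. The following sketch defines a hypothetical helper, `check_mode`, that reports whether a file's mode is at most as permissive as a given limit (for example 644 or 600). It relies on GNU `stat -c '%a'`, which is an assumption about the node's userland, and it demonstrates the check on a temporary file rather than on real cluster paths.

```shell
# check_mode FILE MAX_MODE
# Succeeds when FILE has no permission bit set beyond those in MAX_MODE,
# i.e. its mode is MAX_MODE "or more restrictive".
check_mode() {
  actual=$(stat -c '%a' "$1") || return 2
  # Bits present in the file's mode but absent from MAX_MODE are violations.
  extra=$(( 0$actual & (07777 ^ 0$2) ))
  if [ "$extra" -eq 0 ]; then
    echo "OK   $1 (mode $actual, limit $2)"
  else
    echo "FAIL $1 (mode $actual, limit $2)"
    return 1
  fi
}

# Demonstration on a scratch file; on a real node, pass the actual paths.
demo_file=$(mktemp)
chmod 600 "$demo_file"
check_mode "$demo_file" 644    # passes: 600 is stricter than 644
chmod 666 "$demo_file"
check_mode "$demo_file" 644 || echo "remediate with: chmod 644 $demo_file"
rm -f "$demo_file"
```

Ownership can be verified in the same spirit with `stat -c '%U:%G'` and corrected with `chown`.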

DEFAULT NAMESPACE

The default namespace should not be used. Ensure that namespaces are created for appropriate segregation of Kubernetes resources and that all new resources are created in a specific namespace.
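As a small illustration, the sketch below writes a manifest for a dedicated namespace. The name `team-a` and the `MANIFEST_DIR` variable are hypothetical; `MANIFEST_DIR` defaults to a scratch directory, while on a K3s server manifests placed in /var/lib/rancher/k3s/server/manifests are deployed automatically.

```shell
# MANIFEST_DIR is an assumption for this sketch; on a K3s server it
# would be /var/lib/rancher/k3s/server/manifests.
MANIFEST_DIR="${MANIFEST_DIR:-./k3s-manifests-sketch}"
mkdir -p "$MANIFEST_DIR"

# A dedicated namespace for one team's workloads ("team-a" is made up).
cat > "$MANIFEST_DIR/team-a-namespace.yaml" <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: team-a
EOF

# With kubectl access, resources are then created in that namespace
# explicitly rather than in "default", for example:
#   kubectl apply -n team-a -f app.yaml
```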

Wrapping Up

In my opinion, by addressing shared security challenges head-on and implementing proactive measures, we can mitigate risks and ensure the integrity and confidentiality of our K3s deployments. Leveraging security tools and solutions tailored for Kubernetes environments empowers us to continuously monitor for security threats and vulnerabilities, enabling us to respond swiftly to emerging risks. As we navigate the complexities of container orchestration and security, prioritizing a proactive and comprehensive approach to security is essential in safeguarding our K3s environments. For more insightful blogs, visit auxin.io